NIH funding inequities: sizes, sources and solutions

What would happen if the Social Security Administration gave 11 cents to each beneficiary in Texas for every dollar given to each beneficiary in Massachusetts? What if the Federal Highway Administration allocated, per mile of interstate, 20 percent as much to Indiana as to Maryland? What if per-student funding to Mississippi from the Department of Education was only 8 percent of that to Pennsylvania?

The populace would light their torches, grab their pitchforks and march off to slay the monster. Agency officials would scramble to avoid culpability and to write new policies, however ineffectual, to demonstrate their noble intentions. Public servants would draft legislation to establish a more equitable distribution of taxpayers' dollars.

National Institutes of Health data (available to the public through the NIH RePORTER database) on research project grant funding over a 10-year period show that scenarios like these actually describe where the NIH sends our tax dollars. Massachusetts was given about nine times more funding per capita than Texas, Maryland was awarded five times more than Indiana, and Pennsylvania got 12 times more than Mississippi.

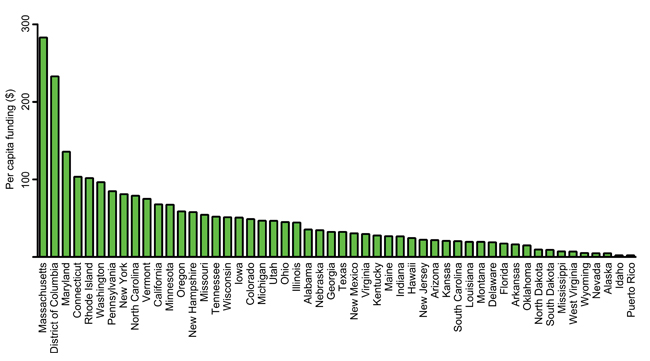

The mind-boggling disparity can be seen by plotting funding to the 50 states plus the District of Columbia and Puerto Rico (Figure 1). There was a greater than 100-fold range in per capita funding between states. The top 10 states were awarded, on average, 19 times more funding per capita than the bottom 10. Fifteen states were overfunded, and 37 were underfunded relative to the national per capita value. Nearly two-thirds of all grant dollars were allocated to one quartile of states.
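The comparisons in this paragraph (per capita funding, the top-versus-bottom ratio, and the count of states above or below the national value) can be sketched in a few lines of Python. This is a minimal illustration only: the four "states" and their dollar and population figures are hypothetical placeholders, not NIH data, and the function names are invented for this example.

```python
from statistics import mean

def per_capita(funding, population):
    """Return funding per resident for each state."""
    return {state: funding[state] / population[state] for state in funding}

def top_bottom_ratio(values, n):
    """Mean of the n largest per capita values divided by the mean of the n smallest."""
    ranked = sorted(values.values(), reverse=True)
    return mean(ranked[:n]) / mean(ranked[-n:])

def over_under(funding, population):
    """Split states by whether they exceed the national per capita value."""
    national = sum(funding.values()) / sum(population.values())
    pc = per_capita(funding, population)
    over = sorted(s for s, v in pc.items() if v > national)
    under = sorted(s for s, v in pc.items() if v <= national)
    return national, over, under

# Hypothetical inputs (NOT NIH data): funding in $ millions, population in millions.
funding = {"A": 900.0, "B": 300.0, "C": 100.0, "D": 20.0}
population = {"A": 6.0, "B": 5.0, "C": 10.0, "D": 4.0}

pc = per_capita(funding, population)
ratio = top_bottom_ratio(pc, n=2)
national, over, under = over_under(funding, population)
print(f"top/bottom ratio: {ratio:.1f}, national per capita: {national:.1f}")
print("above national value:", over, "| at or below:", under)
```

With real data, `funding` and `population` would hold one entry per state plus the District of Columbia and Puerto Rico, and `n` would be 10.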

The prestige factor

Through a Freedom of Information Act request for NIH award data, I discovered proximate causes of the disparities. State-by-state differences in per-application success rates, per-investigator funding rates and average award sizes each contributed to the disparities in per capita funding. For example, investigators in the top-funded quartile of states were, on average, 73 percent more likely to get each grant application funded than investigators in the bottom quartile, and when funded they received on average $106,000 more each year per award. The impacts of differences in success rates and award sizes are multiplicative, giving the geographically privileged investigators about a 230 percent advantage in funding.
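To see why differences in success rates and award sizes multiply rather than add, consider expected dollars per application: the probability of funding times the award size. The sketch below uses the 73 percent success-rate advantage and the $106,000 award difference quoted above; the baseline award size is a hypothetical value chosen for illustration, because the article does not state one.

```python
def combined_advantage(success_ratio, award_top, award_bottom):
    """Ratio of expected dollars per application (top group / bottom group).

    Expected dollars = P(funded) * award size, so the two ratios multiply.
    """
    return success_ratio * (award_top / award_bottom)

SUCCESS_RATIO = 1.73              # from the article: 73 percent more likely to be funded
AWARD_GAP = 106_000               # from the article: dollars per year per award
BASELINE_AWARD = 300_000          # ASSUMPTION: hypothetical bottom-quartile mean award

factor = combined_advantage(SUCCESS_RATIO, BASELINE_AWARD + AWARD_GAP, BASELINE_AWARD)
print(f"expected funding per application, top vs. bottom: {factor:.2f}x")
```

The result depends on the assumed baseline: the smaller the typical award in the bottom quartile, the larger the share of the gap that comes from award size.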

The preferential allocation of funding might be justified if investigators in favored states were more productive scientifically, but this is not the case. The overfunded quartile of states was less productive (scientific publications per dollar of grant support) than each of the three underfunded quartiles. It thus seems clear that the funding process is biased strongly by the investigator's state, as has been reported for investigators grouped by race and by institution.

Most bias is subconscious, and pervasive implicit biases affect the actions of individuals who are not overtly biased.

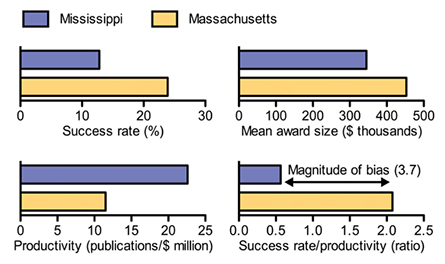

Allocations of resources also are affected by social prestige mechanisms that encompass nonmeritocratic factors such as the wealth, reputation and selectivity of institutions. Moreover, bias can occur during administrative funding decisions as well as in peer review. Small differences in reviewers' scores for preferred and nonpreferred applicants translate into large differences in likelihood of funding. We can quantify the net impact of all sources of bias by measuring the differences in success rates and award sizes versus productivity (Figure 2).
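A toy model shows how a small scoring edge can become a large funding edge when only a thin slice of applications is funded. The sketch assumes, purely for illustration, that review outcomes follow a standard normal distribution and that a preferred applicant's outcome is shifted favorably by a fraction of a standard deviation. The 15 percent funding rate is the approximate figure cited later in this article. Neither the distribution nor the size of the shift comes from NIH data.

```python
from statistics import NormalDist

def funding_probability(payline, shift_sd):
    """Chance of landing inside the payline under a standard normal model.

    payline:  fraction of applications funded (e.g. 0.15).
    shift_sd: favorable shift, in standard deviations, applied to an
              applicant's outcome (0.0 means no preference).
    """
    z = NormalDist()                      # ASSUMPTION: outcomes ~ N(0, 1)
    cutoff = z.inv_cdf(1.0 - payline)     # outcome needed to beat the payline
    return 1.0 - z.cdf(cutoff - shift_sd)

baseline = funding_probability(0.15, 0.0)
preferred = funding_probability(0.15, 0.25)   # hypothetical quarter-SD edge
print(f"baseline: {baseline:.3f}, preferred: {preferred:.3f}, "
      f"relative gain: {preferred / baseline - 1:.0%}")
```

Under these assumptions, a shift that would barely be noticed in any single review raises the chance of funding by a much larger relative amount, because the cutoff sits in the tail of the distribution.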

Failed policies

Officials at the NIH have been aware of these problems for more than a quarter-century. In response to congressional concerns about differences in funding for research and scientific education to states, the NIH and other federal agencies implemented programs intended to promote a more equitable distribution of funding. However, a congressionally mandated study of the programs since their inception revealed they have not reached this goal. Grant application success rates of program-targeted states have remained consistently lower than those of other states, and the aggregate share of funding to advantaged and disadvantaged states has not changed significantly.

[Figure caption, beginning missing] ... in descending order by per capita funding. Data are means of values for fiscal years 2004 to 2013 (1).

Why have policies intended to promote a more equitable distribution of federal grant funding failed? The answer is obvious. None of the programs address directly the proximate causes of the regional disparities in funding, such as the strong biases in NIH grant application success rates and award sizes for investigators grouped by state (see Figure 2).

The talent to carry out research resides throughout the U.S., and the value of a scientist to the nation's research enterprise is largely independent of location. There is no scientific basis for the differences in success rates and award sizes among states.

Reducing bias and discrimination is the right thing to do. The NIH should establish parity of research project grant application success rates and mean award sizes among states. The former could be achieved by the next round of funding decisions; the latter could be phased in over about five years without affecting any active grants. Mechanisms to do so can be understood if we consider the NIH process and the points at which bias can occur.

Points of bias

Each grant application is assigned to a scientific review group, known as an SRG, composed primarily of nonfederal scientists. The SRG generally has about 25 peer reviewers and evaluates about 60 to 70 grant proposals per cycle. There are three cycles per year. In most cases, each application is assigned to two or three primary reviewers who evaluate its scientific merit. They consider five criteria and assign a single, aggregate score for overall scientific impact (1 is best; 9 is worst). Therein lies the first opportunity for bias. Moreover, with two or three reviewers evaluating each application, the biases of a single reviewer can affect greatly the final impact score.

The SRG then convenes to discuss the applications and refine their impact scores. Only those with stronger scores are discussed — often for as little as 15 minutes each. The reviewers can revise their impact scores, and other SRG members then submit anonymously an impact score for the application; unless they publicly state their intent to do otherwise, they must assign a score within the range of the reviewers'.

Only about 15 percent of NIH research project grant applications are funded per cycle, so a successful proposal must be championed by its reviewers and endorsed by the panel. One negative comment can sink an application. Panel discussions and score refinements therefore provide a second opportunity for bias, and many who have served on SRGs recognize that social prestige mechanisms have a role in the process.

Once overall impact scores are available, NIH officials pool the scores from three cycles of the SRG and rank the proposals by score. From that ranking, applications are given a percentile priority score, which spreads the scores over a continuum. Biases in peer review and at the SRG affect these priority score distributions.

Officials in NIH institutes use priority scores to make funding decisions. The fraction of applications that can be funded (the priority score payline) varies among institutes according to the number of proposals being considered and the amount of money available. Paylines are not strict cutoff points; officials can fund or deny funding out of priority-score order, which provides a third opportunity for bias. Evidence for this can be found in published data: When institutions were placed in bins based on their amounts of NIH funding, the mean success rate for bin 1 (the 30 top-funded institutions) was up to 66 percent higher than those of the next three bins — even though there were no significant differences among impact scores from peer review.

Once a decision has been made to fund a project, NIH officials often modify the award size. For example, the total budget of my R01 grant (my sole source of research funding) was cut administratively by 36 percent, relative to the amount of support recommended by the SRG. The administrative decisions to modify the SRG-recommended budgets provide a fourth opportunity for bias.

The impacts of even minor biases at each of the four consecutive opportunities for bias compound multiplicatively through the process. However, we do not need to quantify each type of bias to measure the net impact (see Figure 2) or to take corrective action.
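The compounding can be made concrete with a short calculation. The sketch below assumes, hypothetically, that a favored applicant gains the same small relative advantage at each of the four stages and that the stages act independently. The 10 percent figure is an illustrative placeholder, not a measured value.

```python
from math import prod

def compounded_advantage(stage_advantages):
    """Net relative advantage after a series of stages.

    stage_advantages: fractional edge at each stage (0.10 means 10 percent).
    Stages are assumed independent, so their factors multiply.
    """
    return prod(1.0 + a for a in stage_advantages)

# ASSUMPTION: a hypothetical 10 percent edge at each of the four stages.
net = compounded_advantage([0.10, 0.10, 0.10, 0.10])
print(f"net advantage: {net:.4f}x")   # 1.1 ** 4 = 1.4641
```

Four modest edges of 10 percent each yield a net advantage of about 46 percent, more than the 40 percent a simple sum would suggest.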

It is sufficient to recognize that implicit biases and social prestige mechanisms affect allocations of funding to states, that talented scientists are found in every state and that the NIH is obligated to distribute federal research dollars equitably.

Practical solutions

The funding process also provides three straightforward ways to remediate the biases that occur during peer review and administrative decisions.

First, to address scoring bias in peer review, the NIH should correct for its effects on priority score distributions. The simplest way to do this would be to assign percentile priority scores for applications grouped by state, using the entire cohort of applications from each state and adjusting, if necessary, for differences between SRGs. For example, applications from Ohio would be rank-ordered and assigned percentile priority scores relative to other applications from Ohio. This would ensure that the distribution of priority scores is similar among states: each state would have the same fraction of applications that fall below the payline and get funded.
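The state-grouped ranking could look something like the sketch below. It is a simplified illustration under stated assumptions: the percentile formula (rank divided by group size) is a generic convention rather than the NIH's own, ties are broken arbitrarily by application ID, the adjustment for differences between SRGs is omitted, and the application records are invented.

```python
from collections import defaultdict

def percentiles_within_state(applications):
    """Map application ID -> percentile within its own state (lower is better).

    applications: iterable of (app_id, state, impact_score); a lower impact
    score is better, matching the 1-to-9 scale described earlier.
    """
    by_state = defaultdict(list)
    for app_id, state, score in applications:
        by_state[state].append((score, app_id))

    result = {}
    for scored in by_state.values():
        scored.sort()                      # best (lowest) score first
        n = len(scored)
        for rank, (_, app_id) in enumerate(scored, start=1):
            result[app_id] = 100.0 * rank / n
    return result

def funded(percentiles, payline):
    """Applications whose within-state percentile is at or below the payline."""
    return sorted(a for a, p in percentiles.items() if p <= payline)

# Hypothetical applications: the "XX" group scores better across the board.
apps = [
    ("OH-1", "OH", 2.0), ("OH-2", "OH", 3.0), ("OH-3", "OH", 4.0), ("OH-4", "OH", 5.0),
    ("XX-1", "XX", 1.0), ("XX-2", "XX", 1.5), ("XX-3", "XX", 2.5), ("XX-4", "XX", 3.5),
]
pct = percentiles_within_state(apps)
print(funded(pct, payline=25.0))
```

In this toy case a 25 percent payline funds one application from each group, whereas ranking all eight together by raw score would fund the two best-scoring "XX" applications and none from Ohio.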

Second, to address decision bias in administrative review, NIH officials no longer should be allowed to fund applications or deny funding out of priority-score rank order. Paylines would still vary among institutes, but all applications of a given type within the auspices of a given institute would be treated fairly with regard to the decision whether to fund them.

Third, to address biases in award sizes, the NIH should establish interstate parity of mean total award size for all research project grants. This would be easy, because institute officials routinely modify award budgets. The sizes of individual awards still could vary greatly, but there no longer would be regional favoritism in dollars per grant overall.
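One way to picture interstate parity of mean award size is as a per-state scaling factor applied to award budgets. The sketch below is an assumption-laden illustration, not a description of any NIH procedure: the award amounts are invented, and the `phase` parameter is a hypothetical knob for phasing the change in gradually.

```python
def parity_scale_factors(awards_by_state, phase=1.0):
    """Return state -> multiplier moving each state's mean award toward the national mean.

    awards_by_state: dict of state -> list of award sizes.
    phase: fraction of the adjustment to apply (1.0 = full parity,
           0.2 = one-fifth of the way there).
    """
    all_awards = [a for awards in awards_by_state.values() for a in awards]
    national_mean = sum(all_awards) / len(all_awards)
    factors = {}
    for state, awards in awards_by_state.items():
        state_mean = sum(awards) / len(awards)
        full = national_mean / state_mean           # factor for complete parity
        factors[state] = 1.0 + phase * (full - 1.0)
    return factors

# Hypothetical award sizes in $ thousands (NOT NIH data).
awards = {"X": [400.0, 600.0], "Y": [200.0, 300.0, 400.0]}
factors = parity_scale_factors(awards)
scaled = {s: [a * factors[s] for a in v] for s, v in awards.items()}
for state, values in scaled.items():
    print(state, [round(v, 1) for v in values])
```

Scaling this way leaves total spending unchanged at any value of `phase`, and individual awards within a state keep their relative sizes. Only the state-level means converge.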

While these approaches would help remediate regional funding bias, competition for grant support would remain fierce, and the vast majority of applications would remain unfunded. The process still would fund only projects highly rated by peer review. However, the diversity of perspectives, tools and creative ideas would increase, along with the return on taxpayers' investments.

States with high population densities of scientists, which is arguably a legacy of unbalanced allocations made in the past, would continue to secure a disproportionate share of NIH grant funding. The majority of funding for biomedical research and scientific education still would be concentrated in a minority of states. But at least scientists would be allowed to compete on equal footing with scientists in other states for the grant dollars that taxpayers put into the system.
